The Heart of AI: Intensifying Competition in the Semiconductor Market

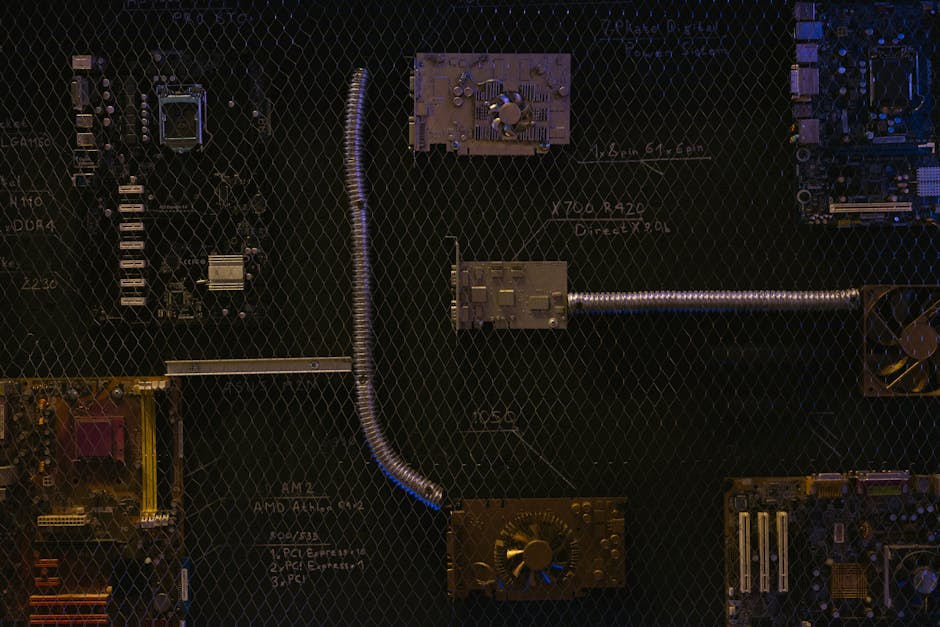

Every time I use AI tools to boost my productivity or spark creative ideas, I’m reminded of the incredible hardware powering it all: AI semiconductors. This isn’t just about raw specs; these chips are the very brains of artificial intelligence. The market for these crucial components is a battleground: established GPU giants hold significant sway, but cloud providers, startups, and even open-source initiatives are all vying for a piece of the future. Who truly holds the cards to dominate the AI era?

NVIDIA’s Unshakeable Fortress: Is it Truly Invincible?

NVIDIA currently reigns supreme in the AI semiconductor space. The company recognized early that the parallel processing capabilities of GPUs were ideal for deep learning, and it built a powerful moat with its CUDA software ecosystem, making it incredibly hard for developers to look elsewhere. From my own experience, when evaluating new AI models, NVIDIA GPU compatibility and performance are always top considerations. The company’s technological prowess and first-mover advantage make it seem unassailable for now. However, this very dominance creates an interesting paradox: high costs and architectural lock-in are potential vulnerabilities that competitors are eager to exploit. Can NVIDIA maintain its absolute leadership against a growing tide of challengers?

The Rise of Hyperscalers and Nimble Challengers

While NVIDIA continues to dominate the high-end GPU market, tech giants like Google with its TPUs, Amazon with Trainium/Inferentia, and Microsoft with Maia are investing heavily in their own custom AI chips. Their goal? To optimize performance and cost-efficiency for their vast data centers and reduce dependency on external vendors. From my perspective, this isn’t merely about cost savings; it’s a strategic move to control the innovation pace of their AI services. Moreover, in emerging areas like edge AI, numerous startups are developing specialized Neural Processing Units (NPUs) that offer low-power, high-efficiency solutions, shaking up the traditional landscape. While their market share is still small, these purpose-built chips signal a significant diversification in the future AI semiconductor market.

Beyond the Chip: Ecosystems, Software, and Strategic Partnerships

The AI semiconductor race isn’t just about who can build the fastest chip or cram the most cores onto a die. I believe that software ecosystems and strategic partnerships will ultimately determine the winners. Even the most powerful chip is useless without accessible frameworks, robust libraries, and a thriving developer community. To counter NVIDIA’s formidable CUDA barrier, competitors are pushing open-source alternatives like AMD’s ROCm and collaborative efforts like ONNX, the open model format originally launched by Microsoft and Facebook (now Meta), to attract developers. Furthermore, the strategy is shifting from simply selling chips to providing optimized solutions for specific industries and forging deep collaborations with AI model developers. Ultimately, whoever builds the broadest and most robust ‘AI ecosystem’ will shape the future.

A Future of Diversity, Not a Single Victor

The AI semiconductor market feels like the Wild West: a gold rush attracting countless innovators and churning out new technologies and ideas. While NVIDIA will likely maintain a strong lead for some time, I predict a future where no single player dominates everything. Custom chips from cloud providers, specialized NPUs for edge AI, and advancements in open-source hardware will converge to create a more fragmented and diverse market. The crucial factor will be which companies can provide the most optimized solutions for specific needs and build strong, trusted relationships with the developer community. We all need to pay close attention to the unfolding dynamics of this fascinating AI semiconductor showdown.

#AI semiconductors #NVIDIA #AI chip competition #future of AI #NPU